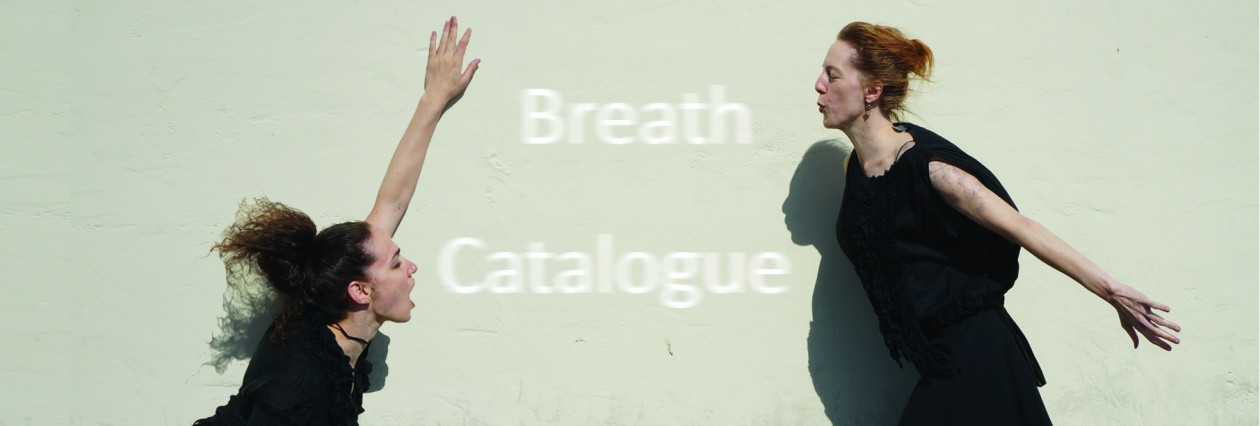

Here is an excerpt from the interview with Katharine Hawthorne that was first published in Sciart Magazine:

Ben Gimpert: The sensor measures four things: the diaphragmatic or chest pressure placed on the device, as well as three dimensions of acceleration. These four numbers are sampled about thirty times per second, and then sent over Bluetooth radio to a laptop.
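As a rough illustration of the data described above, here is a minimal TypeScript sketch of a single sensor reading. The names `BreathSample` and `parseSample`, and the comma-separated text format, are assumptions made for illustration only; the interview does not say how the sensor actually encodes what it sends over Bluetooth.

```typescript
// Hypothetical shape of one reading: chest pressure plus three acceleration axes.
interface BreathSample {
  pressure: number;
  ax: number;
  ay: number;
  az: number;
}

// Approximate rate, taken from "about thirty times per second".
const SAMPLES_PER_SECOND = 30;

// Parse an assumed "pressure,ax,ay,az" text line.
// Returns null when the line does not contain exactly four finite numbers.
function parseSample(line: string): BreathSample | null {
  const fields = line.trim().split(",");
  if (fields.length !== 4) {
    return null;
  }
  // Treat empty fields as invalid, since Number("") would otherwise yield 0.
  const nums = fields.map((f) => (f.trim() === "" ? NaN : Number(f)));
  if (nums.some((n) => !Number.isFinite(n))) {
    return null;
  }
  const [pressure, ax, ay, az] = nums;
  return { pressure, ax, ay, az };
}
```

At roughly thirty samples per second, a one-minute scene would produce on the order of 1,800 such readings.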

The famous Joy Division album cover. Smoky particles at a rave in the nineties. The dancers wanting their breath to leave an almost-real residue in the space. In each case the breath is not visualized literally, because that would be boring. If the pressure sensor has a low reading, suggesting that Kate or Megan is at an inhale, the code might move the frequency blanket imagery in a snapped wave upward. Or invert the breath by sending the neon bars outwards.
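The non-literal mapping described here could be sketched roughly as follows. This is not the Breath Catalogue code: the threshold value, the assumption that pressure is normalized to the range 0 to 1, the `WaveDirection` type, and the function names are all hypothetical, and the "down" branch is a guess, since only the inhale case is described.

```typescript
// Hypothetical direction in which the imagery is pushed.
type WaveDirection = "up" | "down";

// Assumed cutoff: a normalized pressure below this is treated as an inhale.
const INHALE_THRESHOLD = 0.3;

// A low pressure reading suggests the dancer is at an inhale.
function isInhale(pressure: number, threshold: number = INHALE_THRESHOLD): boolean {
  return pressure < threshold;
}

// Map a reading to a wave direction: upward on an inhale,
// and (as an assumption) downward otherwise.
function waveDirection(pressure: number): WaveDirection {
  return isInhale(pressure) ? "up" : "down";
}
```

Inverting the breath, as with the neon bars, would amount to swapping the two branches.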

Who is driving the collaboration? Did the dancers/choreographers suggest modes of interaction and then the visuals develop to suit the choreography? Or did the possible visualizations shape the movement landscape?

I have seen a lot of contemporary dance where an often-male technologist projects his video onto usually-female dancers. This is both sloppy politics, and pretty lazy. I wanted there to be a genuine feedback loop between what my code would project in the space, and how Kate and Megan move. So I was in the dance studio with the dancers throughout the creation of the piece.

For Breath Catalogue, we developed custom software specifically for the piece. This custom approach took a hardware prototype like the sensor and avoided a proprietary (commercial) software dependency. In a very practice-as-research sense, I would often make live changes to the code while in the studio. The Breath Catalogue visualizations run in a web browser, so it was easy for Kate and Megan to run them outside of the studio, at home. We are planning to release the Breath Catalogue software under an open source license, to support the community. (Some utility code is already released on GitHub.)

There’s a moment in the piece when Megan takes off the sensor and transfers it to Kate. Is their breath data significantly different? Also, has this moment ever caused any technical difficulties? Does the sensor have to recalibrate to a different body?

Yes, Kate and Megan each have a distinct style of breathing. If you are adventurous, this can be teased out of the breath data we posted online. In this piece, Megan’s breath is usually more staccato and Kate’s sustained. The sensor reconnects at several points, which is technically challenging. In the next iteration of Breath Catalogue, we will be using multiple sensors worn by one or more dancers. The visualization software that I built already supports this, but it is trickier from a hardware standpoint.

In your experience, how much of the data visualization translates to the audience? How easy is it for an untrained eye to “get” what is going on and understand the connection between the performers’ breathing and the images?

It turns out to be quite difficult. We added a silent and dance-less moment at the beginning of the piece so the audience could understand the direct effect of the dancer’s breath on the viz. Yet, even with that, the most common question I have been asked about my work with Breath Catalogue was about the literal representation of the breath. As contemporary dance audiences, we are accustomed to referential and metaphorical movement. However, I think visualizations are still expected to be literal, like an ECG. Or just decorative.

What is your favorite part of the piece?

In the next-to-last scene, the wireless pocket projector was reading live sensor data from the dancer via the attached mobile phone, which was pretty fucking tough from a technical standpoint. Also the whimsical moment when Kate watches and adjusts her breath according to the bass line of that Police song. And when Megan grabs the pocket projector for the film noir, and then bolts.

If you had the time to rework or extend any section, which would it be?

In one scene we remix the live breath data with data from earlier in that evening’s show. I would have made this more obvious to the audience, because it could be a pretty powerful way to connect breath and time passing.